#source link has instructions on how to use this program

Softimage XSI EXP for Half-Life 2

#half life#half life 2#softimage#source link has instructions on how to use this program#looks like a pain in the ass to set up though#I would just use the SourceIO plugin for Blender instead

complete beginner's guide to [kpop] giffing, as explained by me!

keep in mind everyone has a different style and process, so there are some things in mine that may not feel intuitive or helpful - if so, don't include it! if you can make the gif, and have fun doing it, that's good enough. this post also assumes interest in specifically kpop giffing, but can for the most part apply to other content as well. i would just recommend different sharpening and coloring for those.

separated by headings (not images, since i need those... for examples) - if you are skipping to a specific section, look for the orange text of what you are looking for!

table of contents:
- picking your programs
- additional tools and programs (optional)
- finding files
- importing to photoshop (vapoursynth, screencaps, etc)
- photoshop shortcuts and actions
- sharpening
- coloring
- export settings
- posting on tumblr

programs:

if you already have photoshop / your giffing program installed and set up, go ahead. if you don't, i would recommend looking here to get photoshop. please make sure you follow instructions carefully and safely! i do know photopea is an alternative people use, and i'm sure there are others. i unfortunately don't know any tutorials to link and probably won't be much help in regards to those programs, but i'm sure there are some floating around on tumblr!

my gif process uses photoshop, so this tutorial assumes that as well. if you use a different one, you might be able to transfer this to what you use, i'm not really sure :(

additional tools and programs:
- handbrake: upscaler, found here. i make my own settings and generally only use this on lower quality sources to give vapoursynth a better chance at encoding in higher quality.
- davinci resolve: a program i use to make clips appear 60fps when they are choppy or have too few frames for the gif to look nice, and occasionally to do pre-photoshop coloring! i use the free version. i do not use this all the time and it is a big program, so definitely don't get it if you think you'd forget to / not want to use it.
- yt-dlp: open source, downloads pretty much any file i could want quite well. i use this for most of my downloads and for subtitles as well. it does require some comfort with the command line, or, at the very least, willingness to troubleshoot set-up. find it here! i can share the command lines i run for: video, audio, vid + sub.
- 4k+ downloader: what most people use for youtube-source sets, i believe. you have a limited number of downloads per day on the free version, thus the other downloading programs.
- jdownloader2: no limit, but sometimes a little slow, or it will refuse to download because you're not logged into an account, etc. a good download alternative if you plan on downloading a lot and yt-dlp is too much.
- vlc: free program, standard on some devices, good for viewing files.
- mpv: excellent for viewing files, can also deinterlace and screencap (if you do not have / use vapoursynth).
- vapoursynth: mac users beware, it's not optimized or set up and is a huge headache. windows users, once you get it set up, it's golden.
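if you want a starting point for yt-dlp, here's a minimal python sketch using its python api (yt_dlp.YoutubeDL). this is a hypothetical setup, not the exact command lines mentioned above. the mode names, the mp3 codec and the english subtitle default are all placeholders to change. it assumes yt-dlp is installed (pip install yt-dlp) and that ffmpeg is installed for merging video + audio and for audio extraction.

```python
def build_ytdlp_opts(mode: str, sub_langs=("en",)) -> dict:
    """Return a yt-dlp options dict for 'video', 'audio', or 'video+sub' (hypothetical modes)."""
    opts = {}
    if mode in ("video", "video+sub"):
        # Best video stream merged with best audio (needs ffmpeg); else best single file.
        opts["format"] = "bestvideo+bestaudio/best"
    elif mode == "audio":
        opts["format"] = "bestaudio/best"
        # Extract / convert the audio track with ffmpeg.
        opts["postprocessors"] = [
            {"key": "FFmpegExtractAudio", "preferredcodec": "mp3"}
        ]
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    if mode == "video+sub":
        # Download uploaded subtitle files in the requested languages.
        opts["writesubtitles"] = True
        opts["subtitleslangs"] = list(sub_langs)
    return opts


def download(url: str, mode: str = "video") -> None:
    # Imported here so build_ytdlp_opts works even without yt-dlp installed.
    from yt_dlp import YoutubeDL

    with YoutubeDL(build_ytdlp_opts(mode)) as ydl:
        ydl.download([url])
```

the rough command-line equivalents are: yt-dlp -f "bestvideo+bestaudio/best" URL for video, yt-dlp -x --audio-format mp3 URL for audio, and adding --write-subs --sub-langs en for subtitles.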

finding files, what to look for, and how to use them: how to use is explained here, with a focus on smooth and nice speeds! but finding the file is the first step, so let's head there.

stages: k24hrs (invite only, feel free to private dm or send an ask off anon for a file - i will do my best to get it to you); kpopbuzzhub; sharing korea torrent (requires a torrent app); twitter (shrghkqud (only has recent files) and a few other uploaders, requires much more active searching). there are a few other places you can look, but it is often more trouble than it is worth (or costs $). i am also always willing to help people find materials, so you can ask me to help you look for something or to link a source i used for whatever set.

music videos: also sometimes on k24hrs. i use vimeo a lot (color graders, directors, etc., will upload clearer versions). sharemania.us has some kpop mvs, typically from bigger groups (e.g. blackpink); this is a place i check for ggs. if none of these places work, and searching for torrents on btdigg or on google in korean doesn't turn anything up, i just download the highest quality setting from youtube.

other types: vlogs and fancams are normally downloaded directly from youtube, instagram, or twitter, using yt-dlp or sites made specifically for the app (e.g. twittervideodownloader).

what do i look for? 1080i (or 1080p) files for stages are often super nice, as they're a .ts. 2160p/4k is often ideal, but it also depends on your computer and what you are comfortable working with! generally - not always, due to ai upscaling - a bigger file size (in the gbs, or high mbs like 800) is better and has more detail that will look clear when you work on it. older stages (2nd gen and before, some 3rd gen) and music videos are almost always lower quality due to camera quality, and much harder to find. if you have to use the youtube upload for a stage, it is definitely doable, but the quality may be slightly disappointing compared to what you want to achieve. it depends on what you're comfortable posting and making!
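one rough way to sanity-check "bigger is better": file size only means something next to how long the video is, so dividing size by duration gives the average bitrate. below is a minimal python sketch of that. it is an assumed rule of thumb, not a hard rule, and the function names are made up. an ai-upscaled file can still have a high bitrate without real detail.

```python
def avg_bitrate_mbps(size_bytes: float, duration_s: float) -> float:
    """Average bitrate in megabits per second (decimal units: 1 MB = 10**6 bytes)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    # bytes -> bits, spread over the running time, expressed in millions of bits.
    return size_bytes * 8 / duration_s / 1_000_000


def compare_files(files):
    """Sort (name, size_bytes, duration_s) tuples from highest to lowest average bitrate."""
    return sorted(files, key=lambda f: avg_bitrate_mbps(f[1], f[2]), reverse=True)
```

for example, an 800 MB file of a 3-minute stage works out to about 35.6 Mbps. the same 800 MB spread over a 60-minute concert is only about 1.8 Mbps.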

how do i use them? if you use vapoursynth, scenedetect (encode/process whole video) or timestamp (just a small clip) your file - if upscaling, using davinci resolve, or any other pre-processing, do that first. it will pull up a resizer and a program and once you encode, it will give you an output file using the size and settings you put. this will import to photoshop. if using mpv or another screencapping method, take your screencaps (again, all pre-processing first) and prepare to import. there are two ways to do that for screencaps. you can also just watch whatever you downloaded, i'm guilty of downloading concert files just for fun 😅

in the next sections, i will be using four different files of varying quality and sources to explain my steps. hopefully that is helpful!

- example file 1 (4k and 60fps, obtained using yt-dlp)
- example file 2 (1080i, obtained from k24hrs)
- example file 3 (1080p HD, obtained using yt-dlp)
- example file 4 (pulled from the gg archive i use, master)

importing to photoshop (vapoursynth and mpv explained): using example file 1, i am processing in vapoursynth - i always do one extra second before and after the clip i actually want so it doesn't cut off any frames i'd like. i adjust my sizes based on what works best for tumblr (540px for wide, 268px for 2 column, and 178px for 3 column). i always use finesharp 1.5 (this setting is up to you! i used to use .7, so it's totally ok if it changes over time, too!). when you export in vapoursynth, you need the y4m header. i use the export to mov preset.
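the padding and sizing above are just arithmetic, so here's a small python sketch of both. the function names are made up and this isn't part of vapoursynth. it only works out which frame numbers to trim to, and what height a width of 540/268/178px gives you for a source's aspect ratio. it assumes square pixels.

```python
import math

# Widths quoted in the post for tumblr layouts (px).
TUMBLR_WIDTHS = {"wide": 540, "2 column": 268, "3 column": 178}


def padded_frame_range(start_s, end_s, fps, pad_s=1.0, total_frames=None):
    """Frame indices covering [start_s, end_s] plus pad_s seconds on each side.

    Rounds outward (floor / ceil) so no wanted frame is lost.
    """
    first = max(0, math.floor((start_s - pad_s) * fps))
    last = math.ceil((end_s + pad_s) * fps)
    if total_frames is not None:
        # Don't run past the final frame of the source.
        last = min(last, total_frames - 1)
    return first, last


def resized_height(src_w, src_h, target_w):
    """Height that keeps the source aspect ratio at target_w (square pixels assumed)."""
    return max(1, round(src_h * target_w / src_w))
```

for example, a 4k (3840x2160) clip from 12.0s to 15.5s at 60fps pads out to frames 660 through 990. resizing it to 540px wide gives a height of 304px.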

when importing to photoshop using a video (so vapoursynth, video files, not screencaps):

i recommend making your selected range a little bigger than the frames you want so none of them accidentally get left out. delete any extra frames while in the frame animation and then turn it into a video timeline. i turn all my layers into a smart object (select them all by clicking the bottom layer and then shift-clicking the top one, or use ctrl + alt + a on windows to select them faster). set the timeline framerate (if you want to; i always use 60).

now your smart object is ready to sharpen and color!

using example file 2, i am processing in mpv - i hit 'd' until deinterlace is on 'auto'. find the clip you'd like and hit your screencap shortcut (alt+s for me). how clear your screencaps are depends on how you set up your software (if mpv, what compression you told it to use), but they should be super clear. screencaps can take up a ton of storage, so i recommend only screencapping what you need and deleting them after. when you hit your shortcut, play the file to the end of the clip you want and hit the screencap shortcut again to stop. your frames should now be in the folder you designated, as pngs. delete extra frames now!

you now have two options: import as is, which can be a little slow, or turn them into dicom files. importing as is is done through file > scripts > load files into stack. it will prompt you to select what is being loaded - change Use: to folder, and let it process. it will be slow. hit ok when the file list updates.

when it is done loading: create frame animation -> make frames from layers -> reverse frames. i would crop now using the crop tool for processing speed, and then proceed to do video timeline, smart object, and frame rate. when cropping: on the top, above your document names, the second image should show up on the crop tool - this can set your dimensions. i zoom and crop screencaps using this.

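the other option is DICOM files, which is what i use. when you have your pngs, open the folder in file explorer (windows), press alt + d to jump to the address bar, type cmd, and press enter to open a command prompt in that folder. then run this command: ren *.* *.dcm and press enter. it will update the files. on macOS, just rename the file extension to .dcm.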

then, import as so (it will prompt you to select the folder your .dcm are in, and will not work if the files are not actually DICOM). i find it much faster than the stack import. crop when it is done importing for processing time, like the other screencap import style.

create frame animation -> make frames from layers -> video timeline -> smart object from layers -> 60 fps.
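if you'd rather not use cmd for the dicom rename (or you're on mac), the same rename can be done with a short python script. this is a sketch, not part of photoshop or mpv. like ren, it only changes the extension and does not convert anything. unlike ren *.* *.dcm, it only touches .png files by default, so stray files in the folder stay as they are.

```python
from pathlib import Path


def rename_to_dcm(folder, pattern="*.png"):
    """Rename files matching pattern in folder to the same name with a .dcm extension.

    Mirrors `ren *.png *.dcm`: only the extension changes, the file contents do not.
    Returns the new paths in sorted (frame) order.
    """
    renamed = []
    for path in sorted(Path(folder).glob(pattern)):
        target = path.with_suffix(".dcm")
        path.rename(target)
        renamed.append(target)
    return renamed
```

usage would be something like rename_to_dcm(r"C:\path\to\screencaps"), where the path is a placeholder for wherever mpv saves your pngs.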

photoshop shortcuts and actions:
(windows)
- ctrl + alt + a: select all layers
- ctrl + shift + alt + w: export as image
- ctrl + alt + shift + s: save for web (legacy) - this is the gif one!

actions are imported or created, i've shared my sharpening ones before. there are plenty you can find (or make) for a variety of things, such as aligning objects to a fixed position on all docs, doing the screencap reverse and import for you, etc. they are imported using load actions - select the [downloaded] .atn file and it'll pop up.

sharpening: explained here, and has my actions (feel free to use). very important to the quality and clarity of the gif. the better the file, the clearer the gif looks; sharpening can only do so much. play around during this step!

coloring: by far the most personal-taste stage of giffing. i explain my process here. in kpop giffing, we tend to focus on unwhitewashing. other giffing tends to be more about aesthetic taste and fun, since the source has better colors to work with.

export settings: these are what i use! you need the 256 colors. i do not recommend lossy or interlaced. i think bicubic sharper is the clearest i have tried.

posting on tumblr: use these dimensions FOR GIFS. edits can be different. height is up to you - i would not go over 800, but i think my quality looks weird past the 600 range anyway.

example gifs:
- yeji, from example file #1, imported using vs. uses no. 1 sharpening (altered) from my pack. colored.
- zhanghao, from example file #2, imported using mpv and the load via stack. uses no. 4 sharpening (altered) from my pack. colored.
- taeyeon, from example file #3, imported using mpv and the load via dicom. uses no. 1 sharpening from my pack. colored.
- eunbi, from example file #4, imported using vs. uses no. 2 sharpening from my pack. colored.

if your gifs don't look how you want right away, that's okay! it takes time. my first ones were not great either. i am always improving on and working on my gifs. good luck and have fun hehe ♡

#i do not feel that qualified to answer this so caveat im not the best. and please also take ideas from my lovely moots and their resource#tags and tutorials / shared things. they are all so good#m:tutorial#resources#long post#flashing tw#userdoyeons#awekslook#ninitual#useroro#tuserflora#useranusia#userchoi

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot or I classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

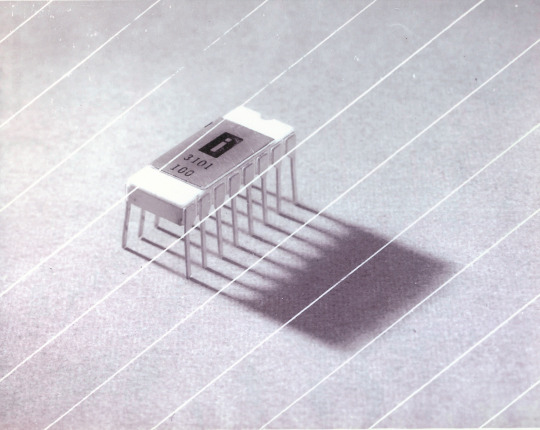

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this (in the '60s you could run a chip fab out of a sufficiently well sealed garage), but they were busy building the groundwork that would lead to the next sixty years.

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs. There have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed at running OpenGenera. I bet you there are no fewer than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is a strange choice, but it was somehow the right one, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80s, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast; you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.
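The core of the translation trick is splitting x86's big, memory-touching instructions into small RISC-like operations before they hit the pipeline. Here is a toy decoder that shows the idea. The instruction format is made up for illustration. It is not real x86 encoding and not the actual P6 micro-op set.

```python
from typing import List, Tuple

# Toy instruction: (opcode, destination, source). Operands written as "[name]"
# refer to memory; anything else is a register. Made-up format, not real x86.
Instr = Tuple[str, str, str]


def is_mem(operand: str) -> bool:
    return operand.startswith("[") and operand.endswith("]")


def to_micro_ops(instr: Instr) -> List[str]:
    """Split a CISC-style instruction into simple load / ALU / store steps."""
    op, dst, src = instr
    uops = []
    if is_mem(src):
        # Memory source: load it into a temporary register first.
        uops.append(f"load tmp1, {src}")
        src = "tmp1"
    if is_mem(dst):
        # Memory destination: load, operate in a register, then store back.
        uops.append(f"load tmp0, {dst}")
        uops.append(f"{op} tmp0, {src}")
        uops.append(f"store {dst}, tmp0")
    else:
        uops.append(f"{op} {dst}, {src}")
    return uops
```

So add [counter], eax becomes three micro-ops (load, add, store), while a register-to-register add stays a single one. Roughly speaking, the execution core then only has to deal with the simple kind.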

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.

From this come many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in the chip, with a second, smaller Unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like, "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."
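The pipelining argument is easy to put numbers on. Below is an idealised sketch. It assumes every stage takes exactly one cycle and that nothing stalls unless you add stall cycles explicitly. Real chips are much messier.

```python
def unpipelined_cycles(n_instr: int, stages: int) -> int:
    # Each instruction passes through every stage before the next one starts.
    return n_instr * stages


def pipelined_cycles(n_instr: int, stages: int, stalls: int = 0) -> int:
    # The first instruction needs `stages` cycles to fill the pipeline; after that,
    # one instruction completes per cycle. `stalls` adds bubble cycles on top.
    if n_instr == 0:
        return 0
    return stages + (n_instr - 1) + stalls
```

With 100 instructions on a 5-stage pipeline, that's 500 cycles unpipelined versus 104 pipelined. Every stall cycle eats into that gap, and a big irregular instruction that hogs a stage for several cycles forces the ones behind it to wait. That is roughly why small, uniform instructions make pipelining easier.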

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers, partly because the story gets pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I've had in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here; it's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

The Life Cycle of a TARDIS

How TARDISes are born, grow, and die.

🌤️ The Birth of a TARDIS

From a Seedling/Sapling

In one version, TARDISes aren't built—they're grown from seeds and/or saplings. The process begins in the Time Travel Capsule Growth Foundry (AKA a TARDIS Shipyard, the Gallifrey Black Hold Shipyard, or the "Womb").

They're put into Dimensionally Transcendental Cradles (AKA Ubbo-Sathla, or maybe Hyperlooms). This is deliberately within an area of null space, where the normal laws of physics don't apply. This is needed to nurture the TARDIS as it begins to grow because the process has to be non-linear.

(Some sources place the Foundry on Gallifrey next to the Caldera, while others say it's located away from Gallifrey to avoid the time pollution, but it's probably the former.)

And Then There's Block Transfer Computations

Some sources claim that TARDISes are actually created by block transfer computations. Put simply, BTC is a concept that allows very clever people to create objects and events in spacetime using pure mathematics.

If this is the right version, a spacetime event is created by using BTC, and then put in a Dimensionally Transcendental Cradle, which turns the event into a viable time capsule like a little spacetime microwave.

Or Maybe Both?

That's not to say a seed couldn't BE this initial spacetime event, or that perhaps BTC are applied to the seed while in the cradles to create sentience, but eh. 🤷

🍼The Growth of a TARDIS

However they start, as they grow, they're embedded with a Protyon Core/Unit, which is programmed with 'greyprints', which are like the blueprints of a house. These greyprints establish all the specifications the TARDIS needs, including essential rooms, etc. The TARDIS then begins to grow using these greyprints.

After ten years growing in the cradle, a TARDIS is moved to a Solar Workshop, where Time Lords implant exitonic circuits. These exitonic circuits are designated XX or XY, depending on what gender was given to the TARDIS in its greyprint, and they also add other features like the temporal drive and chameleon circuits.

Once this process is complete, the TARDIS is considered to be fully working. It's taken to a Berthing Bay in the Dry Dimension Dockyard Cradle and registered, given a unique code. Then, it's ready to bond with a Time Lord.

💀 The Death of a TARDIS

A TARDIS has an estimated lifespan of a few thousand years, but on Gallifrey, TARDISes are like smartphones - every time a new one comes out, you'll trade in the old one. It's expected that if the pilot is upgrading or their TARDIS is otherwise dying, they will put their TARDIS 'to sleep'. This is usually achieved by having a healthy TARDIS help the dying TARDIS into the heart of a star, which allows it to get to the TARDIS Graveyard outside of space and time.

However, some TARDISes are left to decay and die from entropy. Once it's dying, a TARDIS still capable of spacetime flight will go to the TARDIS Graveyard on its own. If it can't, it will attempt to 'call out' to any pilots who have previously symbiotically linked to it for help. If no one answers, the TARDIS will die very slowly, potentially in a near-death state for thousands of years.

🪸 Footnote: A Piece of Coral

It's extremely likely that the piece of coral given to Tentoo by Ten is from the TARDIS' Protyon Unit - you can grow a new TARDIS from a sample of a female TARDIS Protyon Unit, because it contains all the instructions for growth (the greyprints). However, this process will take thousands of years, because the growing TARDIS will have to rely on a nearby Time Lord's artron biofield. In essence, it has to use the Time Lord as a source of energy. This can of course be accelerated by a cradle or some wibbly-wobbly science stuff.

Once it's grown, it will be different to a normal TARDIS, because it hasn't had the exitonic circuits implanted. Without these, the TARDIS will not have the chameleon circuit (it will appear as a featureless metal cylinder), have no gender, and cannot time travel. However, making these exitonic circuits away from Gallifrey is achievable by a Time Lord, even on a rudimentary, technologically limited planet, but it'll take a bit of time.

Gallifreyan TARDIS Biology for Tuesday by GIL

Any orange text is educated guesswork or theoretical. More content ... →📫Got a question? | 📚Complete list of Q+A and factoids →📢Announcements |🩻Biology |🗨️Language |Throwbacks |🤓Facts → Features: ⭐Guest Posts | 🍜Chomp Chomp with Myishu →🫀Gallifreyan Anatomy and Physiology Guide (pending) →⚕️Gallifreyan Emergency Medicine Guides →📝Source list (WIP) →📜Masterpost If you're finding your happy place in this part of the internet, feel free to buy a coffee to help keep our exhausted human conscious. She works full-time in medicine and is so very tired 😴

#doctor who#gallifrey institute for learning#dr who#dw eu#gallifrey#gallifreyans#whoniverse#TOTW: Can you drive this thing?#tardis#tardis biology#gallifreyan culture#gallifreyan lore#gallifreyan society#GIL: Gallifrey/Culture and Society#gallifreyan biology#GIL: Biology#GIL: Species/TARDISes#GIL: Biology/Foundations#GIL: Individuals/The Doctor#GIL: Gallifrey/Technology#GIL

It’s like…both Hylia and Rauru run into the Robot Problem, where they create/cultivate servants who end up achieving sentience (the Robots in SkSw and the Constructs in TotK) or were already sentient to begin with but were supposed to fit into this grand design to restore the order they’d created (the Sheikah, their respective Links and Zeldas). And it gets a little uncomfortable because both characters are so focused on imposing their respective ideas of order that they…don’t quite see their charges as people?

Hylia at least had the excuse that she was a primordial goddess of Light and Time and therefore had no frame of reference to emotionally connect with the mortals she was charged to protect. Her actions were ruthlessly pragmatic, but it made sense for a god totally alienated from humanity to think like that.

Meanwhile, Rauru has this sort of…naïve carelessness? He descended to earth, took the people living there as his subjects, and even fell in love with and married a mortal woman, but he never seemed to understand that his actions could have unintended and far-reaching effects on the people around him.

When confronted with how the Constructs continued to obey their orders instead of leaving to do other things, he couldn’t really muster up more than a sheepish, “Oops, my bad.” Granted, he was a ghost invisible to everyone but Link at the time, but, still, he never considered including a contingency plan in their programming to preserve themselves and do other things with their lives in the event of his own death.

And then there was him and Sonia positioning themselves as Zelda’s surrogate parents after she lost her original parents in traumatic circumstances related to the Sealing War’s aftereffects. Zelda, having to step up as Sonia’s replacement as his advisor and sole confidant to make sure he kept a level head after the queen died. Not stopping Mineru when she started talking about draconification because he was convinced it’d never come to that. Zelda effectively being primed to accept sacrificing herself a second time to preserve the order Rauru created whether he wanted that or not. And then him taking Link’s arm and replacing it without his permission while he was unconscious, because the Ultrahand is such a useful tool, why wouldn’t he be fine with a little limb graft?

Then there was the whole thing with Ganondorf. Just this catastrophic underestimation of human agency, intelligence, and capacity for cruelty. Zelda herself warned him about Ganon being more dangerous than he seemed, but Rauru waved her off (inadvertently recreating yet another Calamity-related trauma of Zelda’s). Ganondorf might be causing trouble, but in the end he was just like those other funny little mortals Rauru watched over. How could he possibly be able to understand something as divine and complicated as a Secret Stone? Only Sonia could do that, but Sonia was Special because she was so smart and wonderful and he loved her very much, so that didn’t count! Furthermore, how could he possibly come up with a plan that could blindside him and the queen? That would be silly!

(Also, note how he didn’t give any non-Sonia mortals stones until after Ganon proved any one of those funny little guys could master using one without any instruction from Rauru, lmao)

And then the circumstances of Rauru’s own death, and the consequences that had for everyone around him well after he’d faded to myth. Rauru assumed the “consequences” Ganondorf threatened him with were limited to giving up his own life, when what actually happened was that the people of Hyrule were left to deal with Ganon punishing them for Rauru’s hubris, poisoning the land again and again in rage at his imprisonment, while the people themselves had to figure out on their own how to beat back each wave of his bilious rage with no record or understanding of its source. Zelda being left with no guidance for how to return home, forced to make a drastic decision. Mineru, still alive, but unable to intervene, as she was just a soul without a body.

Like…I don’t think he’s evil, much less malicious, but the way the story panned out reminded me a lot of the way Rose Quartz kept accidentally hurting the people who loved her because it didn’t come naturally to her to think of other people as equals, or to consider how her actions made them feel. She loved Pearl, but she didn’t acknowledge Pearl’s baggage from their former relationship as master and servant because she’d assumed they’d moved past that simply by never speaking of it anymore. She loved Greg, but she initially didn’t treat him like a real person. She tried as hard as she could to relate to others and understand them, but in the end she committed one last tragic act of thoughtlessness. She thought she would be creating this special, wonderful person free of her flaws who would make the world so much better solely by existing and being himself (at least in part, I think, because she hated herself and thought everyone would be happier without her burdening them with her guilt and her selfish desire to run from her past)…only to leave everyone grappling with the emotional fallout of her no longer existing, including her son, who was stuck dealing with the legacy she’d left behind even though that was never her intention.

She didn’t actively try to sabotage her son’s life from beyond the grave. She didn’t think that the things she’d done would catch up to him, that her old family and enemies would treat him like an interchangeable replacement for her, or that her friends would treat him in strange ways out of grief at losing her. It just…never occurred to her.

But the thing is, I’m not sure if Rauru was intentionally written that way. If he was, the narrative wasn’t really interested in dwelling on the consequences of that, instead treating him and Sonia more like figureheads of this lost, idyllic past that the present must grieve and then strive to follow the example of, even if the present was suffering due to paying the debts of their ancestors’ carelessness.

#legend of zelda#loz thoughts#totk thoughts#totk spoilers#steven universe spoilers#long post#rauru#hylia

195 notes

Text

She wakes up at 7:00 a.m., first taking out the dog, then eating a light breakfast. Leaving her three-bedroom home with a kiss to her husband, her shiny, reasonably new car finds its way through the streets and she makes it to the office after a quick stop for her favorite coffee beverage.

Click click click as she walks through the marble halls, passing by colleagues and supervisors, everyone waves, everyone smiles.

What she makes in a month would have eradicated so much inconvenience and pain in my life. I must not do my poor mathematics to estimate the annual income, or the combined family wages. Having occasional answers unrequested from accidental Facebook encounters provides too much candy for thought.

As the morning janitor passes by, hearing occasional sounds of sweeping, vacuuming or coworkers discussing relevant events of the day, she is hard at work, door ajar in case she needs to provide urgent and immediate instructions to underlings.

Clack clack. Click clack. Boom boom boom boom boom boom boom, really, due to the always-on microphone of the speakerphone pressed hard against the keyboard. -- Covered in what must be a copy of The Godfather or perhaps a purring cat.

Moments later, across the country, my car has made it one more morning. Relatively free of nausea, I locate my seat. After several automatic updates, screens are given birth. Must not tarry as those of us who are in on time pretty much have to cover for another, who might be going to the kitchen for the second time before even clocking in.

A familiar, shrieking tone rings, a forcibly mandated audio alert. Depending on how a company's program decides to deliver it, it could be muted or the loudest thing on God's Earth.

Scrolling, my obligation leads me to the source of the decibel. So early in the morning, I activate all of my biologically supplied experience and education, brushing away thoughts of unpaid bills, medical concerns, and things I've just never gotten around to.

My hindered eyes, miraculously not yet defeated, peer into the electromagnetic wavelengths that I'm allowed to pass witness on. Neurons, struggling to obey laws of meat and liquid, hold tight and majesty appears.

WHEN IS IT

BEEN TIME JOHN WAS AND NOW I AM WHEN IS OF IT

KENT YOU DO FIR HERS IS l?

UTGêNT!!!!!!! CALL ME 555-thisnumberdoesntwork

cc: Managers Who Aren't In Your Department, Employee No Longer Here (bouncing), Contact At Competitor (bouncing), two names very close to mine.

Attached: unopenable pdf (link to online drive on the sender's desktop)

PleumFairy™ Keeping The Handshake In Smiles! --An Irrelevant Company™

2 notes

Note

Do you have any advice for autism & meal prepping?

Hi there,

I found some sources that I hope will be helpful. This talks about meal prep in general:

Here is a Reddit thread discussing meal planning and preparation

Link to Thread

Here is an excerpt from an article going into more detail about food preparation, it’s a bit long, so I apologize:

There are a lot of cooking resources on the Internet. These three are particularly clear with a lot of steps and photographs, and no confusing phrases like "until it looks good to you."

Step-by-Step Cook offers in-depth, detailed, start-to-finish instructions on a wide variety of recipes. This site assumes that the users have no prior cooking experience, and includes photos of what each step should look like.

Cooking for Engineers breaks recipes down very concretely with tables showing which steps are done in order and which are done at the same time. It also has information on cooking gadgets.

Cooking With Autism has some step-by-step sample recipes, and offers a book for purchase.

If following recipes or cooking a lot of different things just isn't going to happen for you, here are some ideas:

Learn how to cook one kind of thing and then do variations on it. For example, learn how to use a rice cooker to make rice and steamed vegetables. Then you can just change the kind of vegetables you steam in it.

Find healthy frozen dinners or other prepared foods that can be heated in a microwave.

Learn what kinds of raw foods or pre-cooked foods will give you a healthy diet and then just make plates of them. For example, raw vegetables, canned beans, canned fish, fruits, bread, nuts, and cheese are all things that can be eaten without needing to touch a stove or put ingredients together.

Make one day a week be "cooking day" and make all of your meals for the week on that day. Reheat them in the microwave or oven, or eat them cold the other six days.

Trade with someone for cooking. If there's something you're good at but someone else finds hard, maybe you can trade with them. For example if you like programming computers, maybe you could build a web site for someone and keep it updated, and in return they could cook for you a few days a week.

Keep some "emergency food" stocked so you don't go hungry on days when preparing food isn't manageable. For example, nuts and dried fruits are nutritious, high in calories, and can last a long time.

The link to this article will be below if you want to read more:

I hope these sources help. Thank you for the inbox. I hope you have a wonderful day/night. ♥️

42 notes

Text

Here is the thing that bothers me, as someone who works in tech, about the whole ChatGPT explosion.

The thing that bothers me is that ChatGPT, from a purely abstract point of view, is really fucking cool.

Some of the things it can produce are fucking wild to me; it blows my mind that a piece of technology is able to produce such detailed, varied responses that on the whole fit the prompts they are given. It blows my mind that it has come so far so fast. It is, on an abstract level, SO FUCKING COOL that a computer can make the advanced leaps of logic (because that's all it is, very complex programmed logic, not intelligence in any human sense) required to produce output "in the style of Jane Austen" or "about the care and feeding of prawns" or "in the form of a limerick" or whatever the hell else people dream up for it to do. And fast, too! It's incredible on a technical level, and if it existed in a vacuum I would be so excited to watch it unfold and tinker with it all damn day.

The problem, as it so often is, is that cool stuff does not exist in a vacuum. In this case, it is a computer that (despite the moniker of "artificial intelligence") has no emotional awareness or ethical reasoning capabilities, being used by the whole great tide of humanity, a force that is notoriously complex, notoriously flawed, and more so in bulk.

-----

During my first experiment with a proper ChatGPT interface, I asked it (because I am currently obsessed with GW2) if it could explain HAM tanking to me in an instructional manner. It wrote me a long explanatory chunk of text, explaining that HAM stood for "Heavy Armor Masteries" and telling me how I should go about training and preparing a character with them. It was a very authoritative-sounding discussion, with lots of bullet points and even an occasional wiki link, IIRC.

The problem of course ("of course", although the GW2 folks who follow me have already spotted it) is that the whole explanation was nonsense. HAM in GW2 player parlance stands for "Heal Alacrity Mechanist". As near as I've been able to discover, "Heavy Armor Masteries" aren't even a thing, in GW2 or anywhere else - although both "Heavy Armor" and "Masteries" are independent concepts in the game.

Fundamentally, I thought, this is VERY bad. People have started relying on ChatGPT for answers to their questions. People are susceptible to authoritative-sounding answers like this. People under the right circumstances would have no reason not to take this as truth when it is not.

But at the same time... how wild, how cool, is it that, given the prompt "HAM tanking" and having no idea what it was except that it involves GW2, the parser was able to formulate a plausible-sounding acronym expansion out of whole cloth? That's extraordinary! If you don't think that's the tightest shit, get out of my face.

----

The problem, I think, is ultimately twofold: capitalism and phrasing.

The phrasing part is simple. Why do we call this "artificial intelligence"? It's a misnomer - there is no intelligence behind the results from ChatGPT. It is ultimately a VERY advanced and complicated search engine, using a vast quantity of source data to calculate an output from an input. Referring to that as "intelligence" gives it credit for an agency, an ability to judge whether its output is appropriate, that it simply does not possess. And given how quickly people are coming to rely on it as a source of truth, that's... irresponsible at best.

The capitalism part...

You hear further stories of the abuses of ChatGPT every day. People, human people with creative minds and things to say and contribute, being squeezed out of roles in favor of a ChatGPT implementation that can sufficiently ("sufficiently" by corporate standards) imitate soul without possessing it. This is not acceptable; the promise of technology is to facilitate the capabilities and happiness of humanity, not to replace it. Companies see the ability to expand their profit margins at the expense of the quality of their output and the humanity of it. They absorb and regurgitate in lesser form the existing work of creators who often didn't consent to contribute to such a system anyway.

Consequently, the more I hear about AI lately, the more hopeful I am that the thing does go bankrupt and collapse, that the ruling goes through where they have to obliterate their data stores and start over from scratch. I think "AI" as a concept needs to be taken away from us until we are responsible enough to use it.

But goddamn. I would love to live in a world where we could just marvel at it, at the things it is able to do *well* and the elegant beauty even of its mistakes.

#bjk talks#ChatGPT#technology#AI#artificial intelligence#just thinking out loud here really don't mind me

23 notes

Text

Taking a look back over the documentation that has been released, it seems fairly simple to do. If you have a Home Assistant setup and are willing to buy suspiciously cheap electronics from Alibaba, here's what I've found.

This covers all of the work done by the official Home Assistant team. Section 2 covers the back-end portion of the tech in the most depth, outside of visiting the various GitHub pages linked. It seems like the majority of the work was sponsored by Nabu Casa, the owners of Home Assistant, and subsequently released as open-source programs. I trust Nabu Casa not to have bundled anything weird in, but make that determination for yourself.

This is the how-to guide using a $13 ESP32-based speaker/microphone. Unfortunately, this device is sold out at this point, and I'm skeptical that it will ever get a wholesale re-release. Other ESP32-based boards may work, but they may also require deviating from the written guide.

This is a second project, based on a different but still out of stock speaker/microphone combo.

All in all, it seems much more viable than I assumed given my info from a few years ago. Wake words have been figured out, the intention system made by Nabu Casa allows for custom instructions, and it can all be hosted locally, assuming you have access to one of the two now-out-of-stock boards.

🥹

113K notes

Text

DBMS Tutorial for Beginners: Unlocking the Power of Data Management

In this "DBMS Tutorial for Beginners: Unlocking the Power of Data Management," we will explore the fundamental concepts of DBMS, its importance, and how you can get started with managing data effectively.

What is a DBMS?

A Database Management System (DBMS) is a software tool that facilitates the creation, manipulation, and administration of databases. It provides an interface for users to interact with the data stored in a database, allowing them to perform various operations such as querying, updating, and managing data. DBMS can be classified into several types, including:

Hierarchical DBMS: Organizes data in a tree-like structure, where each record has a single parent and can have multiple children.

Network DBMS: Similar to hierarchical DBMS but allows more complex relationships between records, enabling many-to-many relationships.

Relational DBMS (RDBMS): The most widely used type, which organizes data into tables (relations) that can be linked through common fields. Examples include MySQL, PostgreSQL, and Oracle.

Object-oriented DBMS: Stores data in the form of objects, similar to object-oriented programming concepts.
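To make "tables linked through common fields" concrete, here is a minimal sketch using Python's built-in `sqlite3` module (SQLite is one of the relational systems listed later in this tutorial). The `customers`/`orders` tables and their columns are hypothetical, chosen only for illustration.

```python
import sqlite3

# In-memory SQLite database: nothing is written to disk.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Two hypothetical tables that share the customer_id field.
# REFERENCES documents the link; SQLite only enforces it after PRAGMA foreign_keys = ON.
cur.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
cur.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, "
    "customer_id INTEGER REFERENCES customers(customer_id), item TEXT)"
)
cur.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])
cur.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, "lamp"), (11, 1, "desk"), (12, 2, "chair")],
)

# The JOIN combines rows from both tables through the common customer_id field.
rows = cur.execute(
    "SELECT c.name, o.item FROM customers AS c "
    "JOIN orders AS o ON o.customer_id = c.customer_id "
    "ORDER BY o.order_id"
).fetchall()
print(rows)  # [('Ana', 'lamp'), ('Ana', 'desk'), ('Ben', 'chair')]
conn.close()
```

Each customer's name is stored once, and orders point to it by ID instead of repeating it.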

Why is DBMS Important?

Data Integrity: DBMS ensures the accuracy and consistency of data through constraints and validation rules. This helps maintain data integrity and prevents anomalies.

Data Security: With built-in security features, DBMS allows administrators to control access to data, ensuring that only authorized users can view or modify sensitive information.

Data Redundancy Control: A DBMS minimizes data redundancy by keeping data in one centralized, shared store (and, in relational systems, through normalized table design), reducing duplicated copies and the inconsistencies they cause.

Efficient Data Management: DBMS provides tools for data manipulation, making it easier for users to retrieve, update, and manage data efficiently.

Backup and Recovery: Most DBMS solutions come with backup and recovery features, ensuring that data can be restored in case of loss or corruption.
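As a small illustration of the integrity point above, here is a sketch (again with Python's built-in `sqlite3`, and a hypothetical `customers` schema) showing the DBMS itself rejecting rows that break declared constraints:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# NOT NULL UNIQUE: every customer needs a distinct email.
# CHECK (age >= 0): negative ages are rejected.
conn.execute(
    "CREATE TABLE customers ("
    "customer_id INTEGER PRIMARY KEY, "
    "email TEXT NOT NULL UNIQUE, "
    "age INTEGER CHECK (age >= 0))"
)
conn.execute("INSERT INTO customers (email, age) VALUES (?, ?)", ("a@example.com", 30))


def try_insert(email, age):
    """Attempt an insert; return True if the DBMS accepted the row."""
    try:
        conn.execute("INSERT INTO customers (email, age) VALUES (?, ?)", (email, age))
        return True
    except sqlite3.IntegrityError as err:
        print("rejected:", err)
        return False


duplicate_ok = try_insert("a@example.com", 25)  # violates UNIQUE
negative_ok = try_insert("b@example.com", -5)   # violates CHECK
count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(duplicate_ok, negative_ok, count)  # False False 1
```

Because the rules live in the schema, every application that writes to this table gets the same protection.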

Getting Started with DBMS

To begin your journey with DBMS, you’ll need to familiarize yourself with some essential concepts and tools. Here’s a step-by-step guide to help you get started:

Step 1: Understand Basic Database Concepts

Before diving into DBMS, it’s important to grasp some fundamental database concepts:

Database: A structured collection of data that is stored and accessed electronically.

Table: A collection of related data entries organized in rows and columns. Each table represents a specific entity (e.g., customers, orders).

Record: A single entry in a table, representing a specific instance of the entity.

Field: A specific attribute of a record, represented as a column in a table.
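The four terms map directly onto code. This sketch uses Python's built-in `sqlite3` module with a hypothetical `customers` table; the comments mark which object corresponds to which concept.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # the database
conn.execute(  # a table
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT)"
)
conn.execute("INSERT INTO customers (first_name, last_name) VALUES ('John', 'Doe')")  # one record

cur = conn.execute("SELECT * FROM customers")
fields = [col[0] for col in cur.description]  # field (column) names
records = cur.fetchall()                       # each tuple is one record (row)
print(fields)   # ['customer_id', 'first_name', 'last_name']
print(records)  # [(1, 'John', 'Doe')]
```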

Step 2: Choose a DBMS

There are several DBMS options available, each with its own features and capabilities. For beginners, it’s advisable to start with a user-friendly relational database management system. Some popular choices include:

MySQL: An open-source RDBMS that is widely used for web applications.

PostgreSQL: A powerful open-source RDBMS known for its advanced features and compliance with SQL standards.

SQLite: A lightweight, serverless database that is easy to set up and ideal for small applications.

Step 3: Install the DBMS

Once you’ve chosen a DBMS, follow the installation instructions provided on the official website. Most DBMS solutions offer detailed documentation to guide you through the installation process.

Step 4: Create Your First Database

After installing the DBMS, you can create your first database. Here’s a simple example using MySQL:

Open the MySQL command line or a graphical interface like MySQL Workbench.

Run the following command to create a new database:

CREATE DATABASE my_first_database;

Then select the database so that subsequent commands run against it:

USE my_first_database;
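If you picked SQLite instead of MySQL, there is no `CREATE DATABASE` or `USE` statement: a SQLite database is a single file that is created when you connect to a new path and write to it. A minimal sketch with Python's built-in `sqlite3` module (the file name and `notes` table are hypothetical):

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    db_path = os.path.join(tmp, "my_first_database.db")
    existed_before = os.path.exists(db_path)

    # Connecting selects (and, if needed, creates) the database file;
    # this plays the role of MySQL's CREATE DATABASE + USE.
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE notes (note TEXT)")
    conn.commit()
    conn.close()

    existed_after = os.path.exists(db_path)

print(existed_before, existed_after)  # False True
```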

Step 5: Create Tables

Next, you’ll want to create tables to store your data. Here’s an example of creating a table for storing customer information:

CREATE TABLE customers (
    customer_id INT AUTO_INCREMENT PRIMARY KEY,
    first_name VARCHAR(50),
    last_name VARCHAR(50),
    email VARCHAR(100),
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

In this example, we define a table named customers with fields for customer ID, first name, last name, email, and the date the record was created.
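The statement above uses MySQL syntax. As a rough sketch, assuming you chose SQLite in Step 2, the same table can be created through Python's built-in `sqlite3` module with two dialect adjustments noted in the comments; `PRAGMA table_info` then lists the columns that were created.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite spells MySQL's INT AUTO_INCREMENT as INTEGER PRIMARY KEY AUTOINCREMENT.
# It accepts VARCHAR(n) as a type name but does not enforce the length limit.
conn.execute(
    "CREATE TABLE customers ("
    "customer_id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "first_name VARCHAR(50), "
    "last_name VARCHAR(50), "
    "email VARCHAR(100), "
    "created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP)"
)
# PRAGMA table_info returns one row per column: (cid, name, type, notnull, default, pk).
columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
print(columns)  # ['customer_id', 'first_name', 'last_name', 'email', 'created_at']
```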

Step 6: Insert Data

Now that you have a table, you can insert data into it. Here’s how to add a new customer:

INSERT INTO customers (first_name, last_name, email)
VALUES ('John', 'Doe', '[email protected]');
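When inserting from application code, it is safer to pass values as parameters than to paste them into the SQL string. A minimal sketch with Python's built-in `sqlite3` module; the table is a simplified SQLite version of the one above and the email address is a hypothetical placeholder.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "first_name TEXT, last_name TEXT, email TEXT, "
    "created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP)"
)
# ? placeholders let the driver quote the values safely; building SQL by
# string concatenation is how SQL injection bugs happen.
cur = conn.execute(
    "INSERT INTO customers (first_name, last_name, email) VALUES (?, ?, ?)",
    ("John", "Doe", "john.doe@example.com"),  # hypothetical example address
)
conn.commit()

new_id = cur.lastrowid  # ID assigned by AUTOINCREMENT
row = conn.execute(
    "SELECT first_name, last_name, created_at FROM customers WHERE customer_id = ?",
    (new_id,),
).fetchone()
print(new_id, row[:2])     # 1 ('John', 'Doe')
print(row[2] is not None)  # True: created_at was filled in by its DEFAULT
```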

Step 7: Query Data

To retrieve data from your table, you can use the SELECT statement. For example, to get all customers:

SELECT * FROM customers;

You can also filter results using the WHERE clause:

SELECT * FROM customers WHERE last_name = 'Doe';
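Here are the same two queries run from Python's built-in `sqlite3` module, as a sketch against a small hypothetical data set. The `WHERE` value is passed as a parameter, and `ORDER BY` makes the row order explicit (without it, SQL does not guarantee any particular order).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT)"
)
conn.executemany(
    "INSERT INTO customers (first_name, last_name) VALUES (?, ?)",
    [("John", "Doe"), ("Jane", "Doe"), ("Ada", "Lovelace")],
)

# Equivalent of: SELECT * FROM customers;
all_rows = conn.execute("SELECT * FROM customers").fetchall()

# Equivalent of: SELECT ... WHERE last_name = 'Doe';
does = conn.execute(
    "SELECT first_name FROM customers WHERE last_name = ? ORDER BY customer_id",
    ("Doe",),
).fetchall()
print(len(all_rows))  # 3
print(does)           # [('John',), ('Jane',)]
```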

Step 8: Update and Delete Data

You can update existing records using the UPDATE statement:

UPDATE customers SET email = '[email protected]' WHERE customer_id = 1;

To delete a record, use the DELETE statement:

DELETE FROM customers WHERE customer_id = 1;
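A sketch of the update and delete steps with Python's built-in `sqlite3` module, using hypothetical example addresses. `rowcount` reports how many rows each statement touched, which is a quick way to confirm that a `WHERE` clause matched what you intended.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO customers (email) VALUES (?)", ("old@example.com",))

# UPDATE changes only the rows matched by WHERE.
updated = conn.execute(
    "UPDATE customers SET email = ? WHERE customer_id = ?", ("new@example.com", 1)
).rowcount
email = conn.execute("SELECT email FROM customers WHERE customer_id = 1").fetchone()[0]

# DELETE removes matching rows; without a WHERE clause it would remove ALL rows.
deleted = conn.execute("DELETE FROM customers WHERE customer_id = ?", (1,)).rowcount
remaining = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
conn.commit()
print(updated, email, deleted, remaining)  # 1 new@example.com 1 0
```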

Conclusion

In this "DBMS Tutorial for Beginners: Unlocking the Power of Data Management," we’ve explored the essential concepts of Database Management Systems and how to get started with managing data effectively. By understanding the importance of DBMS, familiarizing yourself with basic database concepts, and learning how to create, manipulate, and query databases, you are well on your way to becoming proficient in data management.

As you continue your journey, consider exploring more advanced topics such as database normalization, indexing, and transaction management. The world of data management is vast and full of opportunities, and mastering DBMS will undoubtedly enhance your skills as a developer or data professional.

With practice and experimentation, you’ll unlock the full potential of DBMS and transform the way you work with data. Happy database management!

0 notes

Text

Risk Management in Late Phase Clinical Trials: Pharmacovigilance and Safety Monitoring

In the lifecycle of a pharmaceutical product, late-phase clinical trials—specifically Phase IIIb and Phase IV studies—represent a critical period for ensuring the continued safety, efficacy, and reliability of a treatment in real-world use. Unlike early-phase trials conducted in controlled environments with selective populations, late-phase studies involve broader, more diverse patient groups and reflect real-world usage patterns. This makes risk management, pharmacovigilance, and safety monitoring indispensable components of the process.

As regulators, healthcare providers, and patients demand higher standards for ongoing safety assurance, pharmaceutical companies must implement robust systems to identify, assess, and mitigate risks throughout late-stage development.

The Nature of Risk in Late Phase Clinical Trials

While early-phase trials focus on discovering therapeutic effects and optimal dosages, late-phase clinical trials are aimed at understanding how the drug performs in real-world settings, over longer durations, and in a more heterogeneous patient population.

Key sources of risk include:

Uncommon or long-term adverse drug reactions (ADRs)

Interactions with other medications or conditions

Off-label usage and patient misuse

Population-specific effects in pediatrics, geriatrics, or those with comorbidities

Since some of these risks may not manifest until the product is widely used, late phase trials provide the ideal framework for continuous safety evaluation.

Pharmacovigilance: The Cornerstone of Post-Market Safety

Pharmacovigilance (PV) refers to the science and activities related to the detection, assessment, understanding, and prevention of adverse effects or any other drug-related problem. In late-phase clinical trials, pharmacovigilance serves as the backbone of risk management.

Core components include:

Adverse Event Reporting: Capturing and classifying any negative health outcome experienced by participants, whether causally linked or not.

Signal Detection and Evaluation: Using statistical methods and data mining to identify patterns or clusters of adverse events that may indicate a safety concern.

Risk Evaluation: Determining the likelihood, severity, and relevance of potential risks in the context of the drug's benefits.

Risk Minimization Strategies: Implementing measures such as revised dosing instructions, boxed warnings, or restricted use programs to reduce patient exposure to known risks.

In many jurisdictions, pharmacovigilance in late phase trials is not just best practice—it’s a legal obligation.

Global Regulatory Requirements for Safety Monitoring

Various international regulatory authorities mandate structured safety reporting and risk management protocols during late-phase clinical trials:

FDA (USA): Requires sponsors to submit Periodic Adverse Drug Experience Reports (PADERs) and Risk Evaluation and Mitigation Strategies (REMS) when necessary. All serious and unexpected adverse events must be reported under 21 CFR 314.80.

EMA (Europe): Enforces Good Pharmacovigilance Practices (GVP) and mandates Periodic Safety Update Reports (PSURs) and Risk Management Plans (RMPs) under Directive 2001/83/EC.

MHRA (UK): Operates independently of the EMA since Brexit and requires compliance with the Yellow Card Scheme, with similar requirements for signal management and patient safety.

PMDA (Japan): Mandates Good Post-Marketing Study Practices (GPSP) and periodic re-evaluation of safety for up to 8 years post-approval.

Each of these agencies has specific timelines, formats, and criteria for safety data collection and reporting, necessitating a globally coordinated PV strategy.

Safety Monitoring Tools and Methodologies

To enhance safety monitoring in late-phase clinical trials, sponsors and CROs (Contract Research Organizations) utilize a mix of proactive and reactive tools:

1. Electronic Data Capture (EDC) and Electronic Case Report Forms (eCRFs)

Digital systems enable near real-time adverse event documentation, streamlining communication between clinical sites and safety personnel.

2. Data Safety Monitoring Boards (DSMBs)

Independent committees that periodically review cumulative safety data and make recommendations on trial continuation, modification, or termination.

3. Signal Detection Algorithms

Automated tools apply disproportionality analysis (e.g., the proportional reporting ratio, PRR, and the reporting odds ratio, ROR) to detect unusual patterns in large safety databases such as FAERS or EudraVigilance.

4. Patient-Reported Outcomes (PROs)

Capture of subjective experiences such as fatigue, nausea, or quality-of-life impacts, offering deeper insight into treatment tolerability.

5. Real-World Evidence (RWE)

Supplemental safety data gathered from electronic health records, registries, and claims databases can complement traditional trial findings.
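The PRR and ROR mentioned under signal detection algorithms are simple ratios over a 2×2 table of spontaneous reports. Below is a minimal sketch with hypothetical counts; the log-scale 95% confidence interval for the ROR is the standard normal approximation, and real signal-detection pipelines add further thresholds and clinical review on top.

```python
import math


def disproportionality(a, b, c, d):
    """Return (PRR, ROR, ROR 95% CI) from a 2x2 table of spontaneous reports.

    a: reports with the drug of interest AND the event of interest
    b: reports with the drug of interest and any other event
    c: reports with all other drugs and the event of interest
    d: reports with all other drugs and any other event
    """
    # PRR: share of the drug's reports that mention the event,
    # divided by the same share for all other drugs.
    prr = (a / (a + b)) / (c / (c + d))
    # ROR: odds of the event among the drug's reports vs. other drugs' reports.
    ror = (a * d) / (b * c)
    # Normal approximation on the log scale for the ROR confidence interval.
    se_log_ror = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    ci = (
        math.exp(math.log(ror) - 1.96 * se_log_ror),
        math.exp(math.log(ror) + 1.96 * se_log_ror),
    )
    return prr, ror, ci


# Hypothetical counts, for illustration only.
prr, ror, ci = disproportionality(a=20, b=980, c=100, d=49_900)
print(f"PRR={prr:.2f}  ROR={ror:.2f}  95% CI=({ci[0]:.2f}, {ci[1]:.2f})")
```

Here the event appears in 2% of the drug's reports versus 0.2% for all other drugs, so the PRR is 10.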

Risk Management Planning in Practice

An effective risk management plan (RMP) in late-phase trials should be customized based on the therapeutic area, patient population, and risk profile of the drug.

Key elements include:

Identification of known and potential risks

Summary of safety findings from pre-approval phases

Ongoing risk minimization activities (e.g., patient education)

Post-marketing study commitments and timelines

Continuous benefit-risk assessment and mitigation strategies

In some cases, post-marketing risk-sharing agreements with payers or REMS programs may also be developed based on safety data from late phase studies.

Case Example: Pharmacovigilance in Action

Consider a new oral anticoagulant approved for stroke prevention in atrial fibrillation. Phase III trials show strong efficacy, but concerns remain about bleeding risks in older adults. A post-marketing Phase IV trial includes:

A real-world patient cohort aged 65+

Adverse event tracking over 24 months

Integration with national health registries

Signal detection using RWE tools

The result? Identification of a higher-than-expected gastrointestinal bleeding rate in patients also taking NSAIDs—leading to updated labeling and targeted educational campaigns. This not only ensures patient safety but protects the brand from legal and reputational risks.

Conclusion

Late-phase clinical trials are a vital phase in the lifecycle of pharmaceutical products—not only for validating efficacy in diverse patient populations, but more importantly for monitoring and managing risk. Through rigorous pharmacovigilance and safety monitoring, sponsors can protect patient health, meet global regulatory obligations, and support a drug’s long-term commercial success.

As healthcare systems become increasingly reliant on real-world data and post-marketing evidence, the role of safety management in late-phase trials will continue to grow. Proactive investment in robust, global pharmacovigilance systems is no longer optional—it is a critical pillar of responsible, sustainable drug development.

1 note

Text

MMaDA: Open-Source Multimodal Large Diffusion Models

Multimodal Large Diffusion Language Models

MMaDA, released on Hugging Face, is a new class of multimodal diffusion foundation models that aims for strong performance across several areas: text-to-image generation, multimodal understanding, and textual reasoning.

Three key innovations distinguish this method:

Unified diffusion architecture: MMaDA uses a modality-agnostic design with a shared probabilistic formulation. By eliminating modality-specific components, it integrates and processes different data types within a single model.

Mixed long chain-of-thought (CoT) fine-tuning: This strategy curates a unified CoT format across modalities. By aligning reasoning processes between the textual and visual domains, it provides a cold start for the final reinforcement learning (RL) stage and improves the model's ability to handle complex problems from the outset.

UniGRPO: A unified policy-gradient-based RL algorithm designed for diffusion foundation models. It uses diversified reward modeling to unify post-training performance gains across reasoning and generation tasks.

Experimental results show that MMaDA-8B generalises well as a unified multimodal foundation model, outperforming strong models in several areas. MMaDA-8B outperforms LLaMA-3-7B and Qwen2-7B in textual reasoning, SDXL and Janus in text-to-image generation, and Show-o and SEED-X in multimodal understanding. These results show how successfully MMaDA links pre- and post-training in unified diffusion systems.

MMaDA has several training stage checkpoints:

MMaDA-8B-Base: The checkpoint after pretraining and instruction tuning. It is capable of basic text generation, image generation, image captioning, and thinking. MMaDA-8B-Base has been open-sourced on Hugging Face and has 8.08B parameters. Its training involves pre-training on ImageNet (Stage 1.1), on an image-text dataset (Stage 1.2), and on text instruction following (Stage 1.3).

MMaDA-8B-MixCoT (coming soon): This version uses mixed long chain-of-thought (CoT) fine-tuning and should have advanced textual, multimodal, and image-generation reasoning. After Mix-CoT fine-tuning with text reasoning (Stage 2.1), training adds multimodal reasoning (Stage 2.2). Release was expected two weeks after the 2025-05-22 update.

MMaDA-8B-Max (coming soon): Trained with UniGRPO reinforcement learning, it should excel at complex reasoning and high-quality visual generation. This Stage 3 UniGRPO RL training is slated for release after the code moves to OpenRLHF; it was expected one month after the 2025-05-22 update.

For inference, MMaDA supports semi-autoregressive sampling for text generation, while multimodal generation uses non-autoregressive diffusion denoising. The repository provides inference scripts for text generation, multimodal generation, and text-to-image generation, as well as training code for pre-training and Mix-CoT fine-tuning.
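To give a feel for what "semi-autoregressive" sampling means for a masked diffusion language model, here is a toy sketch of one common scheme: blocks are filled left to right, and within each block the model's most confident predictions are unmasked over a few parallel steps while the rest stay masked. This is an illustration under assumptions, not MMaDA's code: `toy_predict` is a hypothetical random stand-in for the network, and whether MMaDA's sampler matches this scheme in every detail is not confirmed here.

```python
import random

MASK = None  # marks a position that has not been decoded yet


def toy_predict(seq, vocab, rng):
    """Hypothetical stand-in for a diffusion LM: for each masked position,
    return (guessed_token, confidence). A real model would condition on seq."""
    return {i: (rng.choice(vocab), rng.random()) for i, t in enumerate(seq) if t is MASK}


def semi_ar_decode(length, block_size, steps_per_block, vocab, seed=0):
    rng = random.Random(seed)
    seq = [MASK] * length
    # Blocks are decoded strictly left to right (the "autoregressive" part)...
    for start in range(0, length, block_size):
        block = range(start, min(start + block_size, length))
        for step in range(steps_per_block):
            preds = {i: p for i, p in toy_predict(seq, vocab, rng).items() if i in block}
            if not preds:
                break
            # ...while inside a block several tokens are unmasked in parallel:
            # keep the k most confident guesses and leave the rest masked.
            remaining_steps = steps_per_block - step
            k = -(-len(preds) // remaining_steps)  # ceiling division
            best = sorted(preds, key=lambda i: preds[i][1], reverse=True)[:k]
            for i in best:
                seq[i] = preds[i][0]
    return seq


out = semi_ar_decode(length=8, block_size=4, steps_per_block=2, vocab=["a", "b", "c"])
print(out)
```

Choosing `k` by ceiling division guarantees that every position in a block is filled by its last step.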

The research paper introducing MMaDA was submitted to arXiv on May 21, 2025, and is also available through Hugging Face Papers. The trained models and code are hosted on Hugging Face and in the Gen-Verse/MMaDA GitHub repository, and Hugging Face Spaces offers an online demo. According to the cited data, the GitHub repository has 11 forks and 460 stars, and its code is mostly Python. Authors include Ling Yang, Ye Tian, Bowen Li, Xinchen Zhang, Ke Shen, Yunhai Tong, and Mengdi Wang.

#MMaDA#MMaDA8B#MMaDA8BBase#MMaDA8BMixCoT#MMaDA8BMax#multimodaldiffusionfoundationmodels#multimodallargemodelMMaDA#technology#technews#technologynews#news#govindhtech

0 notes

Text

How to Check Sainik School Entrance Exam Results?

Problem:

The Sainik School Entrance Exam is a crucial step for students aspiring to join a disciplined and structured educational environment. However, many candidates and their parents struggle to find accurate information on how to check their results. They often get confused by fake websites, misleading information, or technical errors on result day.

Agitate:

Imagine the frustration of eagerly waiting for the results after months of preparation, only to struggle with website crashes, incorrect login details, or unclear instructions. Many parents face unnecessary stress because they do not know where or how to access the official results. This confusion can lead to delays in further admission processes, reducing their child’s chances of securing a seat.

Adding to the complexity, students preparing under Sainik School Coaching in Delhi often expect quick and hassle-free access to their results, but lack of proper guidance can turn this into an exhausting process.

Solution:

To ensure you check your results smoothly, follow this step-by-step guide:

Step 1: Visit the Official Website

The All India Sainik School Entrance Examination (AISSEE) is administered by the National Testing Agency (NTA). The results are published on the official website of NTA: https://aissee.nta.nic.in.

Step 2: Click on the Result Link

Once on the website, look for the link labeled ‘AISSEE Result 2025’ (or the respective year). Clicking on this link will direct you to the result-checking portal.

Step 3: Enter Login Credentials

To access the results, enter the following details:

Application Number

Date of Birth (as per the application form)

Security Pin (Captcha Code displayed on the screen)

Step 4: Download and Check Your Scorecard

After logging in successfully, the result will appear on the screen. The scorecard will contain:

Candidate’s Name

Roll Number

Category

Marks Obtained in Each Section

Total Score

Qualifying Status

The scorecard can be downloaded and printed for future use.

Step 5: Check Cut-Off Marks and Merit List

Each Sainik School has a different cut-off based on seat availability, exam difficulty, and number of applicants. After checking your result, compare your marks with the expected cut-off. If you qualify, your name will appear in the merit list published by individual Sainik Schools.

Step 6: Follow the Medical Exam and Admission Process

Qualified candidates must appear for a medical examination before final selection. Keep checking the official website of the selected Sainik School for further instructions.

Case Study: Success from Sainik School Coaching Classes in Delhi

A recent case from a leading Sainik School coaching institute in Delhi highlights how structured preparation improves success rates. Out of 200 students enrolled in the program, 150 successfully cleared the written exam, and 120 proceeded to the medical test. This shows the importance of targeted preparation under expert guidance.

Final Thoughts

Checking the Sainik School Entrance Exam results doesn’t have to be a stressful process. By following these simple steps, you can quickly access your results and move forward with the next phase of admissions. Parents who enroll their children in structured coaching, such as Sainik School Coaching in Delhi, often see better success rates due to expert guidance and timely information.

For more updates on Sainik School admissions, preparation tips, and National Defence Academy (NDA) entrance exams, stay connected with reliable sources and coaching institutes.

#Sainik School Coaching in Delhi#Sainik School Coaching Classes in Delhi#Sainik School Coaching institute in Delhi#Sainik School Coaching Center in Delhi#Best Sainik School Coaching in Delhi#Online coaching for Sainik school exam in delhi


How can enterprises make glue potting machines intelligent?

In modern industrial production, glue potting is a key step in product manufacturing, and its quality and efficiency directly affect the overall performance and production cost of the product. With the rapid development of science and technology, intelligence has become an important direction for upgrading the glue potting process.

So, how can companies make their glue potting machines intelligent?

1. Algorithm optimization and intelligent control

The core of intelligence lies in the algorithm. By optimizing the control algorithm of the glue potting machine, enterprises can achieve precise control of the potting process. Advanced algorithms can analyze the three-dimensional structure of the product and plan the best glue filling path, avoiding wasted glue and uneven potting.

In addition, introducing advanced control systems such as a PLC (programmable logic controller) or a DCS (distributed control system) can further raise the level of automation and intelligence in the potting process. These systems can automatically handle glue proportioning, mixing, potting, and other steps according to a preset program, improving production efficiency and product quality.

2. Sensor upgrade and data interaction

The sensor is the "eye" of the glue potting machine, and its performance directly affects the accuracy and stability of glue filling. Enterprises should actively upgrade their sensors, for example to high-resolution vision sensors that capture finer image detail, so that glue is dispensed accurately into the designated areas.

At the same time, enabling data exchange between glue potting machines and other equipment is also key to intelligence. Using Internet of Things (IoT) technology, glue potting machines can upload operating data in real time and receive remote instructions, enabling remote monitoring and fault diagnosis and reducing downtime and maintenance costs.

3. Human-machine collaboration and operation optimization

Optimizing the human-machine collaboration mode is also an important part of making glue potting machines intelligent. Enterprises should provide an intuitive, convenient operating interface so that operators can easily monitor the glue potting machine and make any necessary adjustments. They should also establish an efficient feedback mechanism so that unusual situations operators notice during production are promptly fed back to the machine's intelligent control system, allowing the system to learn and improve. This two-way interaction continuously raises the intelligence level of the glue potting machine.

To achieve intelligent glue dispensing, enterprises need to start with algorithm optimization, sensor upgrades, human-machine collaboration, and the introduction of robots and vision systems. As a leading enterprise in the industry, Second Intelligent offers glue dispensing machines that provide strong support for achieving this goal.

source: https://secondintelligent.com/industry-news/glue-potting-machines-intelligence/


Top Useful Smart Appliances for Modular Kitchen

Source of info: https://medium.com/@itnseo70/top-useful-smart-appliances-for-modular-kitchen-1d2bcb1dfff6

Introduction

The best smart appliances for a modular kitchen improve performance, convenience, and aesthetics. Smart refrigerators with touchscreen controls and inventory tracking make grocery management easier. Smart dishwashers offer eco-friendly, customizable wash cycles, and built-in smart ovens deliver consistent cooking results. Sensor-equipped range hoods and induction cooktops automatically adjust their settings for the best cooking results. Voice-activated appliances such as food processors and coffee makers simplify everyday tasks. Smart trash bins, smart lighting, and advanced water purifiers round out the setup, adding functionality while preserving the sleek look of a modular kitchen and keeping the space technologically advanced and well organized.

1. Smart Refrigerators

Refrigerators are no longer just cooling devices. Today's smart refrigerators offer many features that complement modular kitchen design. They can connect to your smartphone and often include voice assistants and touchscreens, offering cooking instructions, recipe suggestions, and grocery tracking. These refrigerators usually have streamlined designs that fit smoothly with your modular cabinets, maintaining a clean, structured look.

2. Built-in Smart Ovens

For people who like to bake or prepare delicate meals, a built-in smart oven is a valuable upgrade. These ovens offer modern conveniences and save space by fitting into the cabinets of your modular kitchen. They typically include smartphone control, self-cleaning, and pre-programmed recipes. With remote preheating and app-based tracking of the cooking process, you can make sure your food is cooked exactly the way you want.

3. Smart Dishwashers

Busy homes benefit greatly from smart dishwashers. They are designed to fit neatly under your kitchen countertops and include features such as energy usage tracking, customizable wash cycles, and remote monitoring. Some models even have sensors that detect how dirty your dishes are and automatically adjust detergent and water levels for the best possible cleaning. Their whisper-quiet operation also keeps your kitchen peaceful.

4. Voice-Controlled Range Hoods

A range hood keeps the air in your kitchen clean, and smart range hoods go one step further. Equipped with sensors and voice control, they can automatically adjust fan speed based on the intensity of cooking fumes. Their modern, minimalist design keeps your space fresh and odor-free while complementing the streamlined look of a modular kitchen.

5. Smart Induction Cooktops

Induction cooktops are already popular for their efficiency, and smart versions improve on them further. With app connectivity, you can use your phone to find recipes, set timers, and adjust heat levels. Smart cooktops install easily into modular kitchen counters for a clean look, and their energy-saving settings and child-lock safety make them well suited to modern homes.

6. Wi-Fi Enabled Coffee Makers

For coffee lovers, a Wi-Fi-enabled coffee maker is an essential smart device. You can schedule these machines to brew your preferred coffee before you even enter the kitchen. Their compact size lets them fit easily into a modular kitchen, freeing up counter space for other activities. Many models also include adjustable brew settings to suit any taste.

7. Intelligent Food Processors

Every kitchen needs a food processor, and smart models make meal preparation even easier. With voice commands, pre-programmed functions, and app connectivity, they can chop, grind, and blend ingredients with precision. Their compact size makes them ideal for modular kitchens, offering maximum utility without taking up much space.

8. Smart Microwaves

Microwaves have long been a kitchen staple, but smart microwaves are expanding what they can do. These appliances offer voice control, customizable cooking modes for different recipes, and scan-to-cook technology. Their streamlined, built-in designs create an attractive, well-organized look when paired with modular kitchen cabinets. You can even monitor your cooking remotely to make sure you never overcook your food.

9. Advanced Water Purifiers

Water purifiers are no longer simply functional appliances. Smart water purifiers now come with features such as UV purification, app connectivity, and real-time water quality monitoring. Designed to fit well with modular kitchen cabinets, they provide clean drinking water while preserving the kitchen's overall look. To ensure the best performance, some models even alert you when it's time to replace the filters.

10. Smart Trash Bins

Smart trash bins are a creative addition to modular kitchen design. Equipped with motion sensors, these bins open and close automatically for convenience and hygiene. Some models include waste-separation compartments that make recycling easy. Their streamlined style also fits well with a modern kitchen's design.

11. Smart Lighting Systems

Even though it’s not a traditional appliance, smart lighting plays an important role in the functionality and ambiance of your modular kitchen. Adjustable brightness and color settings let you create the perfect setting for cooking, dining, and relaxing. Smart lighting systems, which can be controlled with voice commands or a smartphone, give your kitchen a modern, energy-saving touch.

The Benefits of Smart Appliances in Modular Kitchen Design

Equipping a modular kitchen with smart appliances has many benefits. First, by automating routine activities, they save time and energy. Second, their stylish, compact designs help keep the kitchen clutter-free and attractive. Finally, by using resources more efficiently, smart appliances support a more sustainable way of life and are a great long-term investment.

Conclusion

Incorporating smart appliances into a modular kitchen layout is an important step toward a more efficient, enjoyable, and stylish cooking experience. These products, ranging from advanced water purifiers to smart refrigerators, are designed to improve functionality without sacrificing style. By choosing the right smart appliances, you can turn your kitchen into a space of comfort and innovation suited to the needs of modern life.


KMSPico Activator Download for Windows & Office: A Comprehensive Guide

Discovering the KMSPico download for Windows 10 was like finding the missing puzzle piece for my PC setup. At first, I hesitated, unsure if it would deliver on its promises, but the seamless experience quickly put my doubts to rest. The site’s user-friendly design made everything crystal clear, and the download process was as smooth as silk. Not only did it simplify my activation needs, but it also saved me from unnecessary hassles. Talk about a game-changer! If you’re searching for a solution that truly works without the fluff, this is the one to trust. It’s not just a tool; it’s peace of mind for your system.

KMSPico is a popular tool used to activate Windows and Microsoft Office products without requiring a valid product key. If you're seeking a way to activate your Windows or Office software for free, KMSPico has gained considerable attention due to its effectiveness and simplicity. In this article, we will explore everything you need to know about KMSPico, how to download it, and how it works.

What is KMSPico?

KMSPico is an activator for Windows operating systems and Microsoft Office applications. Developed by Team Daz, it allows users to activate Windows and Office products with just a few clicks. It relies on the Key Management Service (KMS), a legitimate Microsoft activation system that large organizations typically use to activate many devices from a central server. KMSPico emulates a KMS server on the user's own machine, so individual users can activate their software without buying a product key.

KMSPico is not authorized by Microsoft, but it is widely used for personal, non-commercial purposes. It can activate nearly all versions of Windows (including Windows 7, 8, 8.1, and 10) and Office (2010, 2013, 2016, 2019, and 2021) without any hassle.

Features of KMSPico

KMSPico offers several benefits for those who need an easy and effective way to activate Windows or Office:

Free Activation: One of the main advantages of using KMSPico is that it provides a completely free way to activate your Windows or Office software. This is especially beneficial for users who don't want to pay for expensive product keys.

Simple User Interface: KMSPico has a very simple interface, which makes it easy to use even for beginners. You don’t need any technical expertise to activate your software with this tool.

Safe and Secure: Despite being a third-party tool, KMSPico is considered safe by its users, provided you download it from a trusted source. Copies obtained from unreliable websites, however, may be bundled with malware or viruses.

No Need for Product Keys: With KMSPico, you don’t need to enter a product key. The tool takes care of the activation process automatically, making it very convenient.

Works on Multiple Versions: KMSPico supports a wide range of Windows versions, including Windows 7, 8, 10, and even the latest updates of Windows 11. It also works with all major versions of Microsoft Office.

How to Download and Use KMSPico?